1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

60

61

62

63

64

65

66

67

68

69

70

71

72

73

74

75

76

77

78

79

80

81

82

83

84

85

86

87

88

89

90

91

92

93

94

95

96

97

98

99

100

101

102

103

104

105

106

107

108

109

110

111

112

113

114

115

116

117

118

119

120

121

122

123

124

125

126

127

128

129

130

131

132

133

134

135

136

137

138

139

140

141

142

143

144

145

146

147

148

149

150

151

152

153

154

155

156

157

158

159

160

161

162

163

164

165

166

167

168

169

170

171

172

173

174

175

176

177

178

179

180

181

182

183

184

185

186

187

188

189

| import torch

import torchvision

import torchvision.transforms as transforms

import matplotlib.pyplot as plt

import torch.nn as nn

import torch.nn.functional as F

import torch.optim as optim

from torch.utils.data import DataLoader

from torch.autograd import Variable

EPOCH = 5

BATCH_SIZE = 100

LR = 0.001

DEVICE = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

'''

transform=torchvision.transforms.Compose([

torchvision.transforms.ToTensor(),

torchvision.transforms.Normalize(

(0.1307,), (0.3081,))

])

'''

train_data = torchvision.datasets.MNIST(

root='./data/',

train=True,

transform=transforms.ToTensor(),

download=False

)

test_data = torchvision.datasets.MNIST(

root='./data/',

train=False,

transform=transforms.ToTensor(),

download=False

)

train_loader = DataLoader(

dataset=train_data,

batch_size=BATCH_SIZE,

shuffle=True

)

test_loader = DataLoader(

dataset=test_data,

batch_size=BATCH_SIZE,

shuffle=False

)

'''

# 查看数据(可视化数据)

def datashow(train_loader):

images, label = next(iter(train_loader))

images_example = torchvision.utils.make_grid(images)

images_example = images_example.numpy().transpose(1,2,0) # 将图像的通道值置换到最后的维度,符合图像的格式

mean = [0.5,0.5,0.5]

std = [0.5,0.5,0.5]

images_example = images_example * std + mean

print(labels)

plt.imshow(images_example )

plt.show()

'''

class Net(nn.Module):

def __init__(self):

super(Net, self).__init__()

self.conv1 = nn.Conv2d(1, 6, 3, 1, 2)

self.conv2 = nn.Conv2d(6, 16, 5)

self.fc1 = nn.Linear(16 * 5 * 5, 120)

self.fc2 = nn.Linear(120, 84)

self.fc3 = nn.Linear(84, 10)

def forward(self, x):

x = F.max_pool2d(F.relu(self.conv1(x)), (2, 2))

x = F.max_pool2d(F.relu(self.conv2(x)), 2)

x = x.view(-1, self.num_flat_features(x))

x = F.relu(self.fc1(x))

x = F.relu(self.fc2(x))

x = self.fc3(x)

return x

def num_flat_features(self, x):

size = x.size()[1:]

num_features = 1

for s in size:

num_features *= s

return num_features

net = Net().to(device=DEVICE)

criterion = nn.CrossEntropyLoss()

optimizer = optim.Adam(net.parameters(), lr=LR)

train_counter = []

train_losses = []

train_accs = []

test_losses = []

test_counter = [i*len(train_loader.dataset) for i in range(EPOCH)]

def train(epoch):

for i, data in enumerate(train_loader, 0):

inputs, labels = data

inputs, labels = Variable(inputs).cuda(), Variable(labels).cuda()

optimizer.zero_grad()

outputs = net(inputs)

loss = criterion(outputs, labels)

loss.backward()

optimizer.step()

if i % 100 == 99:

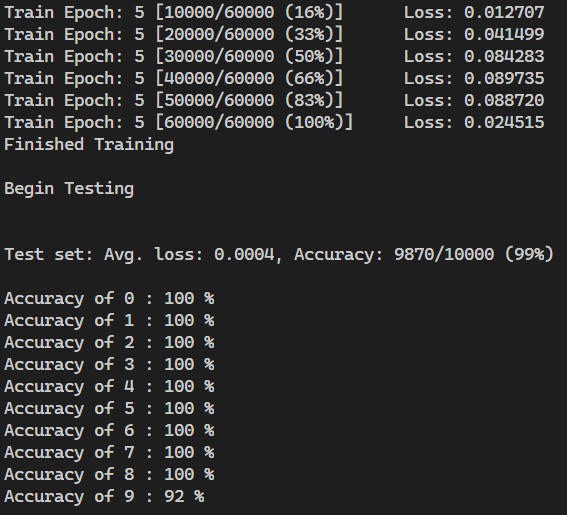

print('Train Epoch: {} [{}/{} ({:.0f}%)]\tLoss: {:.6f}'.format(

epoch, (i+1) * len(inputs), len(train_loader.dataset),

100. * i / len(train_loader), loss.item()))

train_losses.append(loss.item())

train_counter.append((i*BATCH_SIZE) + ((epoch-1)*len(train_loader.dataset)))

correct = 0

total = 0

_, predicted = torch.max(outputs.data, 1)

total = labels.size(0)

correct = (predicted == labels).sum().item()

train_accs.append(100*correct/total)

print('Finished Training')

def test():

print('\n'+"Begin Testing"+'\n')

net.eval()

correct = 0

total = 0

test_loss = 0

with torch.no_grad():

for data in test_loader:

images, labels = data

images, labels = Variable(images).cuda(), Variable(labels).cuda()

outputs = net(images)

loss = criterion(outputs, labels)

test_loss += loss.item()

_, predicted = torch.max(outputs.data, 1)

total += labels.size(0)

correct += (predicted == labels).sum().item()

test_loss /= total

test_losses.append(test_loss)

print('Test set: Avg. loss: {:.4f}, Accuracy: {}/{} ({:.0f}%)\n'.format(

test_loss, correct, total,

100. * correct / total))

class_correct = list(0. for i in range(10))

class_total = list(0. for i in range(10))

with torch.no_grad():

for data in test_loader:

images, labels = data

images, labels = Variable(images).cuda(), Variable(labels).cuda()

outputs = net(images)

_, predicted = torch.max(outputs, 1)

c = (predicted == labels).squeeze()

for i in range(4):

label = labels[i]

class_correct[label] += c[i].item()

class_total[label] += 1

for i in range(10):

print('Accuracy of %s : %2d %%' % (i, 100 * class_correct[i] / class_total[i]))

for epoch in range(1, EPOCH + 1):

train(epoch)

test()

fig = plt.figure()

plt.plot(train_counter, train_losses, color='blue')

plt.scatter(test_counter, test_losses, color='red')

plt.legend(['Train Loss', 'Test Loss'], loc='upper right')

plt.xlabel('epoch')

plt.ylabel('loss')

plt.show()

|